Let’s see how to deploy IBM FileNet on OpenShift!

Before configuring and deploying IBM Content Platform Engine, there are a few prerequisites to meet:

- you need a server with Docker and OpenShift installed (see my post on that installation: https://blog.xoupix.fr/index.php/2020/04/28/installing-okd-on-centos/);

- you need to retrieve the IBM Content Platform Engine and IBM Content Navigator Docker container images from your IBM account.

Creating OpenShift project

First of all, let’s create an OpenShift project:

#!/bin/bash

function configure_openshift(){

# Creating new project

oc login -u ${OPENSHIFT_USER_NAME} -p ${OPENSHIFT_USER_PASSWORD}

oc new-project ${OPENSHIFT_PROJECT_NAME} --display-name="${OPENSHIFT_PROJECT_DISPLAY_NAME}" --description="${OPENSHIFT_PROJECT_DESCRIPTION}"

# Configuring project

oc login -u system:admin

oc patch namespace ${OPENSHIFT_PROJECT_NAME} -p '{"metadata": {"annotations": { "openshift.io/sa.scc.uid-range":"'${OPENSHIFT_BASE_USER_UID}'/10000" } } }'

oc patch namespace ${OPENSHIFT_PROJECT_NAME} -p '{"metadata": {"annotations": { "openshift.io/sa.scc.supplemental-groups":"'${OPENSHIFT_BASE_GROUP_UID}'/10000" } } }'

}

configure_openshift

Log in to OpenShift with your user account and create a new project. Then, so that IBM Content Platform Engine and IBM Content Navigator can run as a non-root user, set the “openshift.io/sa.scc.uid-range” and “openshift.io/sa.scc.supplemental-groups” annotations on the namespace to match the user and group IDs you will configure on your system.
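The two “oc patch” calls in the script can also be merged into a single patch. The sketch below is only an illustration: “build_scc_patch” is a hypothetical helper that is not part of the original script, and the base ID of 50000 and the range size of 10000 in the example call are assumed values.

```shell
#!/bin/bash

# Hypothetical helper (not in the original script): prints one JSON patch
# that sets both annotations on the namespace.
# Usage: build_scc_patch <base_uid> <base_gid> [range_size]
build_scc_patch() {
  local base_uid="$1" base_gid="$2" size="${3:-10000}"
  printf '{"metadata":{"annotations":{"openshift.io/sa.scc.uid-range":"%s/%s","openshift.io/sa.scc.supplemental-groups":"%s/%s"}}}\n' \
    "${base_uid}" "${size}" "${base_gid}" "${size}"
}

# Print the patch for an assumed base ID of 50000 (illustrative value).
build_scc_patch 50000 50000

# Applying it requires a cluster-admin login, as in the script above:
# oc patch namespace "${OPENSHIFT_PROJECT_NAME}" \
#   -p "$(build_scc_patch "${OPENSHIFT_BASE_USER_UID}" "${OPENSHIFT_BASE_GROUP_UID}")"
```

Whatever values you choose, the ranges must contain the user ID and group ID that the containers will run as.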

Pulling Docker images

Before deploying the images on OpenShift, we will load them into the local Docker daemon.

#!/bin/bash

function pull_ibm_images(){

# Installing Docker images from tar.gz archives

if [ -d ${IBM_DOCKER_ARCHIVES} ] ; then

echo -e "\e[92mPulling images from ${IBM_DOCKER_ARCHIVES}\033[0m"

# Loading OpenShift images to Docker

find ${IBM_DOCKER_ARCHIVES} -type f -name "*.tar.gz" -exec docker load -i {} \;

echo -e "\e[92mImages successfully pulled\033[0m"

fi

}

pull_ibm_images

Running the LDAP directory

In this specific case, I will run an OpenLDAP instance. Of course, if you are on an enterprise network, you can connect to the main LDAP server instead.

#!/bin/bash

function prerequisites(){

export i=0

}

function run_openldap(){

echo -e "\e[92mRunning ${OPENLDAP_CONTAINER_NAME} container\033[0m"

docker run -d -t --name=${OPENLDAP_CONTAINER_NAME} --add-host=$(hostname -f):$(ip -4 addr show docker0 | grep -Po 'inet \K[\d.]+') --restart=always -p ${OPENLDAP_LDAP_UNSECURED_PORT}:389 -p ${OPENLDAP_LDAP_HTTPPORT}:80 --env LDAP_BASE_DN=${OPENLDAP_BASE_DN} --env LDAP_DOMAIN=${OPENLDAP_DOMAIN} --env LDAP_ADMIN_PASSWORD=${OPENLDAP_ADMIN_PASSWORD} osixia/openldap:1.3.0 bash

while((${i}<${OPENLDAP_RETRY}*2))

do

isLDAPReady=$(docker logs ${OPENLDAP_CONTAINER_NAME} | grep "openldap")

if [[ "${isLDAPReady}" != "" ]]; then

echo "${OPENLDAP_CONTAINER_NAME} container started, check ldap service now."

isLDAPonLine=$(docker exec -i ${OPENLDAP_CONTAINER_NAME} service slapd status | grep running)

if [[ "${isLDAPonLine}" = "" ]]; then

echo "Need to restart LDAP service now."

docker exec -i ${OPENLDAP_CONTAINER_NAME} service slapd start

exit_script_if_error "docker exec -i ${OPENLDAP_CONTAINER_NAME} service slapd start"

docker exec -i ${OPENLDAP_CONTAINER_NAME} service slapd status

exit_script_if_error "docker exec -i ${OPENLDAP_CONTAINER_NAME} service slapd status"

break

else

echo -e "\e[92mLDAP service is ready.\033[0m"

break

fi

else

echo "$i. LDAP is not ready yet, wait 5 seconds and recheck again...."

sleep 5s

let i++

fi

done

echo -e "\e[92mAdding sample users and groups to LDAP\033[0m"

docker exec -i ${OPENLDAP_CONTAINER_NAME} bash <<EOF

echo "

dn: cn=P8Admin,dc=ecm,dc=ibm,dc=com

cn: P8Admin

sn: P8Admin

userpassword: password

objectclass: top

objectclass: organizationalPerson

objectclass: person

dn: cn=tester,dc=ecm,dc=ibm,dc=com

cn: tester

sn: tester

userpassword: password

objectclass: top

objectclass: organizationalPerson

objectclass: person

dn: cn=P8Admins,dc=ecm,dc=ibm,dc=com

objectclass: groupOfNames

objectclass: top

cn: P8Admins

member: cn=P8Admin,dc=ecm,dc=ibm,dc=com

dn: cn=GeneralUsers,dc=ecm,dc=ibm,dc=com

objectclass: groupOfNames

objectclass: top

cn: GeneralUsers

member: cn=P8Admin,dc=ecm,dc=ibm,dc=com

member: cn=tester,dc=ecm,dc=ibm,dc=com

">/tmp/ecm.ldif

echo "

dn: olcDatabase={1}mdb,cn=config

changetype: modify

replace: olcAccess

olcAccess: to * by * read

">/tmp/ecm_acc.ldif

ldapadd -x -D "cn=admin,dc=ecm,dc=ibm,dc=com" -w password -f /tmp/ecm.ldif

ldapmodify -Y EXTERNAL -Q -H ldapi:/// -f /tmp/ecm_acc.ldif

rm -f /tmp/ecm.ldif /tmp/ecm_acc.ldif

EOF

echo -e "\e[92mLDAP is ready to be used !\033[0m"

}

prerequisites

run_openldap

Nothing special here: the script runs an OpenLDAP instance, waits for it to be ready, then adds some users and groups for the IBM software to use.
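Note that the wait loop in the script carries on with the next step even when all retries are used up. A small retry helper that reports the timeout could look like the sketch below. The names “wait_until” and “ldap_is_ready” are hypothetical and do not come from the original script.

```shell
#!/bin/bash

# Hypothetical helper: runs a command until it succeeds or the attempts run out.
# Returns 0 on success and 1 when every attempt has failed.
# Usage: wait_until <max_attempts> <delay_seconds> <command> [args...]
wait_until() {
  local max_attempts="$1" delay="$2" attempt=1
  shift 2
  while [ "${attempt}" -le "${max_attempts}" ]; do
    if "$@"; then
      return 0
    fi
    echo "Attempt ${attempt}/${max_attempts} failed, waiting ${delay}s..." >&2
    sleep "${delay}"
    attempt=$((attempt + 1))
  done
  return 1
}

# Assumed readiness check, modelled on the grep used in the script above.
ldap_is_ready() {
  docker logs "${OPENLDAP_CONTAINER_NAME}" 2>&1 | grep -q "openldap"
}

# Example (commented out because it needs a running Docker daemon):
# wait_until "${OPENLDAP_RETRY}" 5 ldap_is_ready || { echo "LDAP did not start" >&2; exit 1; }
```

The same helper could wrap the DB2 readiness check, with a different command and delay.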

Running the database

As with OpenLDAP, in this specific case I will run a DB2 instance. Of course, if you are on an enterprise network, you can connect to the main database server instead.

#!/bin/bash

function prerequisites(){

export i=0

}

function run_db2(){

echo -e "\e[92mCreating '${IBM_DB2_ROOT_DIR}', '${IBM_DB2_SCRIPT_DIR}' and '${IBM_DB2_STORAGE_DIR}' directories\033[0m"

mkdir -p ${IBM_DB2_ROOT_DIR} ${IBM_DB2_SCRIPT_DIR} ${IBM_DB2_STORAGE_DIR}

echo -e "\e[92mGenerating ${IBM_DB2_ROOT_DIR}/.config file\033[0m"

tee ${IBM_DB2_ROOT_DIR}/.config<<EOF

LICENSE=accept

DB2INSTANCE=${IBM_DB2_INST_NAME}

DB2INST1_PASSWORD=${IBM_DB2_INST_PASSWORD}

DBNAME=

BLU=false

ENABLE_ORACLE_COMPATIBILITY=false

UPDATEAVAIL=NO

TO_CREATE_SAMPLEDB=true

IS_OSXFS=false

BIND_HOME=true

REPODB=false

EOF

echo -e "\e[92mRunning ${IBM_DB2_CONTAINER_NAME} container\033[0m"

docker run -d -h db2server --name ${IBM_DB2_CONTAINER_NAME} --add-host=$(hostname -f):$(ip -4 addr show docker0 | grep -Po 'inet \K[\d.]+') --restart=always --privileged=true -p ${IBM_DB2_SERVER_PORT}:50000 --env-file ${IBM_DB2_ROOT_DIR}/.config -v ${IBM_DB2_SCRIPT_DIR}:/tmp/db2_script -v ${IBM_DB2_STORAGE_DIR}:/db2fs ibmcom/db2:11.5.0.0

while((${i}<${IBM_DB2_RETRY}*2))

do

isDB2Ready=$(docker logs ${IBM_DB2_CONTAINER_NAME} | grep "Setup has completed.")

if [[ "${isDB2Ready}" == "" ]]; then

echo "$i. DB2 is not ready yet, wait 10 seconds and recheck again...."

sleep 10s

let i++

else

echo -e "\e[92mDB2 service is ready.\033[0m"

break

fi

done

echo -e "\e[92mCopying scripts and data to create DB2 tables\033[0m"

# docker cp accepts the container name directly, so no lookup of the container ID is needed

docker cp ${IBM_DB2_SCRIPTS}/DB2_ONE_SCRIPT.sql ${IBM_DB2_CONTAINER_NAME}:/database/config/db2inst1

docker cp ${IBM_DB2_SCRIPTS}/GCDDB.sh ${IBM_DB2_CONTAINER_NAME}:/database/config/db2inst1

docker cp ${IBM_DB2_SCRIPTS}/ICNDB.sh ${IBM_DB2_CONTAINER_NAME}:/database/config/db2inst1

docker cp ${IBM_DB2_SCRIPTS}/OS1DB.sh ${IBM_DB2_CONTAINER_NAME}:/database/config/db2inst1

docker cp ${IBM_DB2_SCRIPTS}/setup_db.sh ${IBM_DB2_CONTAINER_NAME}:/database/config/db2inst1

echo -e "\e[92mCreating DB2 tables\033[0m"

docker exec -i ${IBM_DB2_CONTAINER_NAME} /bin/bash /database/config/db2inst1/setup_db.sh

echo -e "\e[92mDB2 tables successfully created !\033[0m"

}

prerequisites

run_db2

Preparing IBM Content Platform Engine deployment

In order to be ready to deploy IBM Content Platform Engine on OpenShift, you will need some specific configuration.

Directories

Following the official documentation, and because I don’t know which features you will use, I will create all 7 directories:

- cpecfgstore/cpe/configDropins/overrides

- cpelogstore/cpe/logs

- cpefilestore/asa

- cpetextextstore/textext

- cpebootstrapstore/bootstrap

- cpefnlogstore/FileNet

- cpeicmrulesstore/icmrules

The configuration files

The “cpecfgstore/cpe/configDropins/overrides” directory stores all of the IBM Content Platform Engine configuration, such as the LDAP and DB configuration files and the DB drivers.

The DB2JCCDriver.xml configuration file

Because I’m using a DB2 instance as the main database, I need to specify the Java library used to connect to that instance.

<server>

<transaction totalTranLifetimeTimeout="300s" />

<library id="DB2JCC4Lib">

<fileset dir="${server.config.dir}/configDropins/overrides" includes="db2jcc4.jar db2jcc_license_cu.jar"/>

</library>

</server>

Two files are required in this scenario: db2jcc4.jar (the driver library) and db2jcc_license_cu.jar (the license library).

The ldap_TDS.xml configuration file

This file specifies the LDAP connection, the base Distinguished Name (DN) to use, the credentials used to connect to the LDAP server, and the user and group filters.

<server>

<!-- Unique ID Attribute to use : entryUUID -->

<ldapRegistry id="MyTDS" realm="defaultRealm"

host="172.17.0.6"

baseDN="dc=ecm,dc=ibm,dc=com"

port="389"

ldapType="IBM Tivoli Directory Server"

bindDN="cn=P8Admin,dc=ecm,dc=ibm,dc=com"

sslEnabled="False"

bindPassword="password">

<idsFilters

userFilter="(&amp;(cn=%v)(objectclass=person))"

groupFilter="(&amp;(cn=%v)(|(objectclass=groupOfNames)(objectclass=groupOfUniqueNames)(objectclass=groupOfURLs)))"

userIdMap="*:cn"

groupIdMap="*:cn"

groupMemberIdMap="memberof:member">

</idsFilters>

</ldapRegistry>

</server>

The GCD.xml configuration file

This configuration file declares the FileNet GCD (Global Configuration Database) instance, which stores the FileNet domain. It holds everything needed to create the datasources in the IBM Content Platform Engine Liberty server when the IBM Content Platform Engine container is deployed.

For each datasource (simple and XA), there is a small set of information to provide:

- the datasource name (“id” and “jndiName” attributes)

- the library used to interact with the database (“jdbcDriver” node)

- the properties used to connect to the database (“properties.db2.jcc” node in this case), including:

  - the database name (“GCDDB”)

  - the server name (a resolvable host name or the server IP, “172.17.0.8” here)

  - the server port used to reach the database instance (“50000”)

  - the database user name (“db2inst1”)

  - the database user password (“password”)

With all this information, IBM Content Platform Engine will be able to connect to the database instance holding the FileNet domain.

<server>

<dataSource id="FNGDDS" jndiName="FNGDDS" isolationLevel="TRANSACTION_READ_COMMITTED" type="javax.sql.DataSource">

<jdbcDriver libraryRef="DB2JCC4Lib"/>

<properties.db2.jcc

databaseName="GCDDB"

serverName="172.17.0.8"

portNumber="50000"

user="db2inst1"

password="password"

resultSetHoldability="HOLD_CURSORS_OVER_COMMIT"

/>

<connectionManager maxIdleTime="1m" maxPoolSize="50" minPoolSize="0" reapTime="2m" enableSharingForDirectLookups="false"/>

</dataSource>

<dataSource id="FNGDDSXA" jndiName="FNGDDSXA" isolationLevel="TRANSACTION_READ_COMMITTED" type="javax.sql.XADataSource" supplementalJDBCTrace="true">

<properties.db2.jcc

databaseName="GCDDB"

serverName="172.17.0.8"

portNumber="50000"

user="db2inst1"

password="password"

/>

<connectionManager maxIdleTime="1m" maxPoolSize="50" minPoolSize="0" reapTime="2m" enableSharingForDirectLookups="true"/>

<jdbcDriver libraryRef="DB2JCC4Lib"/>

</dataSource>

</server>

The OBJSTORE.xml configuration file

Like the GCD.xml configuration file, the OBJSTORE.xml configuration file provides the same kind of properties. I will not list them again: the file structure is the same as above, only the values change.

<server>

<dataSource id="FNOSDS" jndiName="FNOSDS" isolationLevel="TRANSACTION_READ_COMMITTED" type="javax.sql.DataSource">

<jdbcDriver libraryRef="DB2JCC4Lib"/>

<properties.db2.jcc

databaseName="OS1DB"

serverName="172.17.0.8"

portNumber="50000"

user="db2inst1"

password="password"

resultSetHoldability="HOLD_CURSORS_OVER_COMMIT"

/>

<connectionManager maxIdleTime="1m" maxPoolSize="50" minPoolSize="0" reapTime="2m" enableSharingForDirectLookups="false"/>

</dataSource>

<dataSource id="FNOSDSXA" jndiName="FNOSDSXA" isolationLevel="TRANSACTION_READ_COMMITTED" type="javax.sql.XADataSource" supplementalJDBCTrace="true">

<properties.db2.jcc

databaseName="OS1DB"

serverName="172.17.0.8"

portNumber="50000"

user="db2inst1"

password="password"

/>

<connectionManager maxIdleTime="1m" maxPoolSize="50" minPoolSize="0" reapTime="2m" enableSharingForDirectLookups="true"/>

<jdbcDriver libraryRef="DB2JCC4Lib"/>

</dataSource>

</server>

Copying files and setting directory permissions

Finally, you have to copy all your configuration files into the “cpecfgstore/cpe/configDropins/overrides” directory, and give ownership of all your directories to the appropriate user/group.

#!/bin/bash

function prepare_cpe(){

# Creating IBM Content Platform Engine directories

echo -e "\e[92mCreating IBM Content Platform Engine directories\033[0m"

mkdir -p ${IBM_CPE_CONFIG_DIR}/cpecfgstore/cpe/configDropins/overrides

mkdir -p ${IBM_CPE_CONFIG_DIR}/cpelogstore/cpe/logs

mkdir -p ${IBM_CPE_CONFIG_DIR}/cpefilestore/asa

mkdir -p ${IBM_CPE_CONFIG_DIR}/cpetextextstore/textext

mkdir -p ${IBM_CPE_CONFIG_DIR}/cpebootstrapstore/bootstrap

mkdir -p ${IBM_CPE_CONFIG_DIR}/cpefnlogstore/FileNet

mkdir -p ${IBM_CPE_CONFIG_DIR}/cpeicmrulesstore/icmrules

# Copying IBM Content Platform Engine configuration

echo -e "\e[92mCopying IBM Content Platform Engine configuration\033[0m"

cp -Rrf ${IBM_CPE_BASE_DIR}/config/CPE/custom/configDropins/overrides/* ${IBM_CPE_CONFIG_DIR}/cpecfgstore/cpe/configDropins/overrides

# Setting rights according to the OpenShift container user

echo -e "\e[92mSetting IBM Content Platform Engine rights on directories\033[0m"

chown -R ${IBM_CPE_CONTAINER_USER_ID}:${IBM_CPE_CONTAINER_GROUP_ID} ${IBM_CPE_CONFIG_DIR}/cpecfgstore

chown -R ${IBM_CPE_CONTAINER_USER_ID}:${IBM_CPE_CONTAINER_GROUP_ID} ${IBM_CPE_CONFIG_DIR}/cpelogstore

chown -R ${IBM_CPE_CONTAINER_USER_ID}:${IBM_CPE_CONTAINER_GROUP_ID} ${IBM_CPE_CONFIG_DIR}/cpefilestore

chown -R ${IBM_CPE_CONTAINER_USER_ID}:${IBM_CPE_CONTAINER_GROUP_ID} ${IBM_CPE_CONFIG_DIR}/cpetextextstore

chown -R ${IBM_CPE_CONTAINER_USER_ID}:${IBM_CPE_CONTAINER_GROUP_ID} ${IBM_CPE_CONFIG_DIR}/cpebootstrapstore

chown -R ${IBM_CPE_CONTAINER_USER_ID}:${IBM_CPE_CONTAINER_GROUP_ID} ${IBM_CPE_CONFIG_DIR}/cpefnlogstore

chown -R ${IBM_CPE_CONTAINER_USER_ID}:${IBM_CPE_CONTAINER_GROUP_ID} ${IBM_CPE_CONFIG_DIR}/cpeicmrulesstore

}

prepare_cpe
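The repeated “mkdir” and “chown” lines can also be written as loops. This is only a sketch of the same steps: the function names “create_cpe_dirs” and “chown_cpe_dirs” are hypothetical, and the “cpe*store” glob assumes that the base directory contains no other entries matching that pattern.

```shell
#!/bin/bash

# Creates the seven store directories under the given base directory.
# Usage: create_cpe_dirs <base_dir>
create_cpe_dirs() {
  local base_dir="$1" store
  for store in \
    cpecfgstore/cpe/configDropins/overrides \
    cpelogstore/cpe/logs \
    cpefilestore/asa \
    cpetextextstore/textext \
    cpebootstrapstore/bootstrap \
    cpefnlogstore/FileNet \
    cpeicmrulesstore/icmrules
  do
    mkdir -p "${base_dir}/${store}"
  done
}

# Gives every top-level store directory to the container user (needs root).
# Usage: chown_cpe_dirs <base_dir> <uid> <gid>
chown_cpe_dirs() {
  local base_dir="$1" uid="$2" gid="$3" dir
  for dir in "${base_dir}"/cpe*store; do
    chown -R "${uid}:${gid}" "${dir}"
  done
}

# Example, using the variables from the script above:
# create_cpe_dirs "${IBM_CPE_CONFIG_DIR}"
# chown_cpe_dirs "${IBM_CPE_CONFIG_DIR}" "${IBM_CPE_CONTAINER_USER_ID}" "${IBM_CPE_CONTAINER_GROUP_ID}"
```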

Deploying IBM Content Platform Engine

Creating persistent volumes

Before deploying the IBM Content Platform Engine image, you need to declare some persistent volumes on the OpenShift side (a persistent volume and a persistent volume claim for each directory).

Bootstrap volumes

This volume will be used for upgrade only.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cpe-bootstrap-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/cpebootstrapstore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: cpe-bootstrap-pv

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cpe-bootstrap-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: cpe-bootstrap-pv
  volumeName: cpe-bootstrap-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

Cfgstore volumes

This volume will be used to store the IBM Content Platform Engine configuration files.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cpe-cfgstore-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/cpecfgstore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: cpe-cfgstore-pv

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cpe-cfgstore-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: cpe-cfgstore-pv
  volumeName: cpe-cfgstore-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

Filestore volumes

This volume will be used as file store or system-based advanced storage.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cpe-filestore-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/cpefilestore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: cpe-filestore-pv

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cpe-filestore-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: cpe-filestore-pv
  volumeName: cpe-filestore-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

FileNet Log store volumes

This volume will be used to store IBM Content Platform Engine logs.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cpe-fnlogstore-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/cpefnlogstore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: cpe-fnlogstore-pv

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cpe-fnlogstore-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: cpe-fnlogstore-pv
  volumeName: cpe-fnlogstore-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

ICM Rules volumes

This volume will be used to store IBM Case Manager Rules.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cpe-icmrules-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/cpeicmrulesstore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: cpe-icmrules-pv

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cpe-icmrules-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: cpe-icmrules-pv
  volumeName: cpe-icmrules-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

Log store volumes

This volume will be used to store IBM Content Platform Engine Liberty logs.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cpe-logstore-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/cpelogstore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: cpe-logstore-pv

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cpe-logstore-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: cpe-logstore-pv
  volumeName: cpe-logstore-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

Text ext volumes

This volume will be used as a temporary working space.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cpe-textext-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: cpe-textext-pv
  volumeName: cpe-textext-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cpe-textext-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/cpetextextstore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: cpe-textext-pv
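Since the seven persistent volumes only differ by their name and host path, they could also be generated from a single template. The following is a minimal sketch under that assumption. “render_pv” is a hypothetical helper, not something provided by IBM or OpenShift, and the “cpe-” name prefix is hard-coded to match the volumes above.

```shell
#!/bin/bash

# Hypothetical helper: prints a hostPath PersistentVolume manifest.
# Usage: render_pv <store_name> <host_dir> [size]
render_pv() {
  local store="$1" host_dir="$2" size="${3:-1Gi}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: cpe-${store}-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: ${size}
  hostPath:
    path: ${host_dir}
  persistentVolumeReclaimPolicy: Retain
  storageClassName: cpe-${store}-pv
EOF
}

# Print the manifest of the bootstrap volume.
render_pv bootstrap /home/worker/cpebootstrapstore/

# To create it directly (requires a cluster-admin login):
# render_pv bootstrap /home/worker/cpebootstrapstore/ | oc apply -f -
```

A matching function for the persistent volume claims would follow the same pattern.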

Pushing IBM images to OpenShift Docker registry

Before deploying IBM Content Platform Engine on OpenShift, you must push your Docker images to the OpenShift Docker registry. This is done using the following command lines.

echo -e "\e[92mPushing IBM Content Platform Engine image to OpenShift\033[0m"

oc login -u ${OPENSHIFT_USER_NAME} -p ${OPENSHIFT_USER_PASSWORD}

oc project ${OPENSHIFT_PROJECT_NAME}

docker login -u ${OPENSHIFT_USER_NAME} -p $(oc whoami -t) ${OPENSHIFT_REGISTRY_URL}

docker tag cpe:ga-553-p8cpe ${OPENSHIFT_REGISTRY_URL}/${OPENSHIFT_PROJECT_NAME}/cpe:ga-553-p8cpe

docker push ${OPENSHIFT_REGISTRY_URL}/${OPENSHIFT_PROJECT_NAME}/cpe:ga-553-p8cpe

docker logout ${OPENSHIFT_REGISTRY_URL}

Defining the IBM Content Platform Engine deployment configuration file

In the deployment configuration file, you specify the OpenShift service to create and the deployment profile (used to create the pod). Many settings can be adjusted, such as the entries added to the /etc/hosts file (“hostAliases”), the user ID used to run the container, or the ports to expose. All of these settings depend on your environment.

apiVersion: v1
kind: Service
metadata:
  name: ecm-cpe-svc
spec:
  ports:
    - name: http
      protocol: TCP
      port: 9080
      targetPort: 9080
    - name: https
      protocol: TCP
      port: 9443
      targetPort: 9443
  selector:
    app: cpeserver-cluster1
  type: NodePort
  sessionAffinity: ClientIP
---
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: ecm-cpe
spec:
  replicas: 1
  strategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: cpeserver-cluster1
    spec:
      imagePullSecrets:
        - name: admin.registrykey
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - cpeserver-cluster1
                topologyKey: "kubernetes.io/hostname"
      # Adding specific network aliases
      hostAliases:
        # Resolving ldap hostname
        - ip: "172.17.0.6"
          hostnames:
            - "ldap"
        # Resolving db2 hostname
        - ip: "172.17.0.8"
          hostnames:
            - "db2"
      containers:
        - image: 172.30.1.1:5000/dev/cpe:ga-553-p8cpe
          imagePullPolicy: Always
          name: ecm-cpe
          # Specifying security context
          securityContext:
            # Running container as 50001
            runAsUser: 50001
            allowPrivilegeEscalation: false
          resources:
            requests:
              memory: 512Mi
              # 1 core = 1000 millicores = 1000m
              # 500m = half core
              cpu: 500m
            limits:
              memory: 1024Mi
              cpu: 1
          ports:
            - containerPort: 9080
              name: http
            - containerPort: 9443
              name: https
          env:
            - name: LICENSE
              value: "accept"
            - name: CPESTATICPORT
              value: "false"
            - name: CONTAINERTYPE
              value: "1"
            - name: TZ
              value: "Etc/UTC"
            - name: JVM_HEAP_XMS
              value: "512m"
            - name: JVM_HEAP_XMX
              value: "1024m"
            - name: GCDJNDINAME
              value: "FNGDDS"
            - name: GCDJNDIXANAME
              value: "FNGDDSXA"
            - name: MY_POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: MY_POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: MY_POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          # Application initialization complete
          readinessProbe:
            httpGet:
              path: /P8CE/Health
              port: 9080
              httpHeaders:
                - name: Content-Encoding
                  value: gzip
            initialDelaySeconds: 180
            periodSeconds: 5
          # Application is available
          livenessProbe:
            httpGet:
              path: /P8CE/Health
              port: 9080
              httpHeaders:
                - name: Content-Encoding
                  value: gzip
            initialDelaySeconds: 600
            periodSeconds: 5
          volumeMounts:
            - name: cpe-cfgstore-pvc
              mountPath: "/opt/ibm/wlp/usr/servers/defaultServer/configDropins/overrides"
              subPath: cpe/configDropins/overrides
            - name: cpe-logstore-pvc
              mountPath: "/opt/ibm/wlp/usr/servers/defaultServer/logs"
              subPath: cpe/logs
            - name: cpe-filestore-pvc
              mountPath: "/opt/ibm/asa"
              subPath: asa
            - name: cpe-icmrules-pvc
              mountPath: "/opt/ibm/icmrules"
              subPath: icmrules
            - name: cpe-textext-pvc
              mountPath: /opt/ibm/textext
              subPath: textext
            - name: cpe-bootstrap-pvc
              mountPath: "/opt/ibm/wlp/usr/servers/defaultServer/lib/bootstrap"
              subPath: bootstrap
            - name: cpe-fnlogstore-pvc
              mountPath: "/opt/ibm/wlp/usr/servers/defaultServer/FileNet"
              subPath: FileNet
      volumes:
        - name: cpe-cfgstore-pvc
          persistentVolumeClaim:
            claimName: "cpe-cfgstore-pvc"
        - name: cpe-logstore-pvc
          persistentVolumeClaim:
            claimName: "cpe-logstore-pvc"
        - name: cpe-filestore-pvc
          persistentVolumeClaim:
            claimName: "cpe-filestore-pvc"
        - name: cpe-icmrules-pvc
          persistentVolumeClaim:
            claimName: "cpe-icmrules-pvc"
        - name: cpe-textext-pvc
          persistentVolumeClaim:
            claimName: "cpe-textext-pvc"
        - name: cpe-bootstrap-pvc
          persistentVolumeClaim:
            claimName: "cpe-bootstrap-pvc"
        - name: cpe-fnlogstore-pvc
          persistentVolumeClaim:
            claimName: "cpe-fnlogstore-pvc"

Defining the route to reach your IBM Content Platform Engine cluster

Finally, you have to declare a route to access your IBM Content Platform Engine instance. You can use the following YAML file (make sure you change the “metadata.name”, “metadata.namespace” and “spec.to.name” values to match your environment).

apiVersion: route.openshift.io/v1
kind: Route
metadata:
  annotations:
    openshift.io/host.generated: 'true'
  name: my-route
  namespace: dev
spec:
  port:
    targetPort: http
  to:
    kind: Service
    name: ecm-cpe-svc
    weight: 100
  wildcardPolicy: None
  # TLS termination
  tls:
    # edge, passthrough or reencrypt
    termination: edge
    # Allow http connections
    insecureEdgeTerminationPolicy: Allow

The whole script

#!/bin/bash

function prerequisites(){

# OpenShift Docker Registry

export OPENSHIFT_REGISTRY_URL=$(docker exec $(docker ps --format "{{.Names}}" | grep k8s_registry_docker-registry) env | grep DOCKER_REGISTRY_PORT_5000_TCP_ADDR | cut -d'=' -f2):$(docker exec $(docker ps --format "{{.Names}}" | grep k8s_registry_docker-registry) env | grep DOCKER_REGISTRY_PORT_5000_TCP_PORT | cut -d'=' -f2)

}

function run_cpe(){

# Creating Persistent Volumes

echo -e "\e[92mCreating IBM Content Platform Engine persistent volumes\033[0m"

oc login -u system:admin

oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-bootstrap-pv.yaml ; oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-bootstrap-pvc.yaml

oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-cfgstore-pv.yaml ; oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-cfgstore-pvc.yaml

oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-filestore-pv.yaml ; oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-filestore-pvc.yaml

oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-fnlogstore-pv.yaml ; oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-fnlogstore-pvc.yaml

oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-icmrules-pv.yaml ; oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-icmrules-pvc.yaml

oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-logstore-pv.yaml ; oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-logstore-pvc.yaml

oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-textext-pv.yaml ; oc apply -f ${IBM_CPE_BASE_DIR}/persistent-volumes/cpe/cpe-textext-pvc.yaml

# Adding IBM Content Platform Engine to OpenShift Docker registry

echo -e "\e[92mPushing IBM Content Platform Engine image to OpenShift\033[0m"

oc login -u ${OPENSHIFT_USER_NAME} -p ${OPENSHIFT_USER_PASSWORD}

oc project ${OPENSHIFT_PROJECT_NAME}

docker login -u ${OPENSHIFT_USER_NAME} -p $(oc whoami -t) ${OPENSHIFT_REGISTRY_URL}

docker tag cpe:ga-553-p8cpe ${OPENSHIFT_REGISTRY_URL}/${OPENSHIFT_PROJECT_NAME}/cpe:ga-553-p8cpe

docker push ${OPENSHIFT_REGISTRY_URL}/${OPENSHIFT_PROJECT_NAME}/cpe:ga-553-p8cpe

docker logout ${OPENSHIFT_REGISTRY_URL}

# Deploying IBM Content Platform Engine

echo -e "\e[92mCustomizing IBM Content Platform Engine deployment file\033[0m"

cp -p ${IBM_CPE_BASE_DIR}/deploys/generic-cpe-deploy.yml ${IBM_CPE_BASE_DIR}/deploys/cpe-deploy.yml

DB_IP=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' ibm-db2)

sed -i "s/@DB_IP@/${DB_IP}/g" ${IBM_CPE_BASE_DIR}/deploys/cpe-deploy.yml

LDAP_IP=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' ldap)

sed -i "s/@LDAP_IP@/${LDAP_IP}/g" ${IBM_CPE_BASE_DIR}/deploys/cpe-deploy.yml

echo -e "\e[92mDeploying IBM Content Platform Engine\033[0m"

oc create -f ${IBM_CPE_BASE_DIR}/deploys/cpe-deploy.yml

# Creating route

echo -e "\e[92mCreating IBM Content Platform Engine route\033[0m"

oc create -f ${IBM_CPE_BASE_DIR}/route/cpe-route.yaml

}

prerequisites

run_cpe
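The script above customizes the generic deployment file by replacing the “@DB_IP@” and “@LDAP_IP@” placeholders with “sed”. If “docker inspect” returns nothing (for example because a container is not running), the placeholder is silently replaced by an empty string. The sketch below adds two basic checks around the same substitution. “render_deploy” is a hypothetical helper name, not part of the original script.

```shell
#!/bin/bash

# Hypothetical helper: copies a template to <output>, replacing the @DB_IP@ and
# @LDAP_IP@ placeholders. Fails if an IP is empty or a placeholder is left over.
# Usage: render_deploy <template> <output> <db_ip> <ldap_ip>
render_deploy() {
  local template="$1" output="$2" db_ip="$3" ldap_ip="$4"
  if [ -z "${db_ip}" ] || [ -z "${ldap_ip}" ]; then
    echo "Missing DB or LDAP IP address" >&2
    return 1
  fi
  sed -e "s/@DB_IP@/${db_ip}/g" -e "s/@LDAP_IP@/${ldap_ip}/g" "${template}" > "${output}"
  if grep -q '@[A-Z_][A-Z_]*@' "${output}"; then
    echo "Unresolved placeholder left in ${output}" >&2
    return 1
  fi
}

# Example, using the variables from the script above:
# render_deploy "${IBM_CPE_BASE_DIR}/deploys/generic-cpe-deploy.yml" \
#   "${IBM_CPE_BASE_DIR}/deploys/cpe-deploy.yml" "${DB_IP}" "${LDAP_IP}" || exit 1
```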

Preparing IBM Content Navigator deployment

As with IBM Content Platform Engine, IBM Content Navigator requires some directories to work correctly.

- icncfgstore/icn/configDropins/overrides

- icnlogstore/logs

- icnpluginstore/plugins

- icnvwcachestore/viewercache

- icnvwlogstore/viewerlogs

The configuration files

The “icncfgstore/icn/configDropins/overrides” directory stores all of the IBM Content Navigator configuration, such as the LDAP and DB configuration files and the DB drivers, as was done for IBM Content Platform Engine.

The DB2JCCDriver.xml configuration file

This is the same configuration as for IBM Content Platform Engine: because I’m using a DB2 instance, I also need to specify the Java library used to connect to that instance.

<server>

<transaction totalTranLifetimeTimeout="300s" />

<library id="DB2JCC4Lib">

<fileset dir="${server.config.dir}/configDropins/overrides" includes="db2jcc4.jar db2jcc_license_cu.jar"/>

</library>

</server>

Two files are required in this scenario: db2jcc4.jar (the driver library) and db2jcc_license_cu.jar (the license library).

The ldap_TDS.xml configuration file

I’m still using OpenLDAP as the main LDAP server, so the configuration is the same as the one used for IBM Content Platform Engine, except that the server is referenced by its “ldap” host name instead of its IP address.

<server>

<ldapRegistry id="MyTDS" realm="defaultRealm"

host="ldap"

baseDN="dc=ecm,dc=ibm,dc=com"

port="389"

ldapType="IBM Tivoli Directory Server"

bindDN="cn=P8Admin,dc=ecm,dc=ibm,dc=com"

sslEnabled="False"

bindPassword="password">

<idsFilters

userFilter="(&amp;(cn=%v)(objectclass=person))"

groupFilter="(&amp;(cn=%v)(|(objectclass=groupOfNames)(objectclass=groupOfUniqueNames)(objectclass=groupOfURLs)))"

userIdMap="*:cn"

groupIdMap="*:cn"

groupMemberIdMap="memberof:member">

</idsFilters>

</ldapRegistry>

</server>

The ICNDS.xml configuration file

As with the GCD and object store configuration files, the ICN datasource must be defined so that IBM Content Navigator can connect to DB2.

<server>
  <dataSource id="ECMClientDS" jndiName="ECMClientDS" isolationLevel="TRANSACTION_READ_COMMITTED" type="javax.sql.DataSource">
    <jdbcDriver libraryRef="DB2JCC4Lib"/>
    <properties.db2.jcc
      databaseName="ICNDB"
      serverName="db2"
      portNumber="50000"
      user="db2inst1"
      password="password"
      resultSetHoldability="HOLD_CURSORS_OVER_COMMIT"
    />
    <!-- connectionManager globalConnectionTypeOverride="unshared" / -->
  </dataSource>
</server>

Only a non-XA datasource needs to be defined, with the usual DB2 settings (database name, DB2 server name or IP, DB2 instance port, and DB2 credentials).

Copying files and setting directory permissions

Finally, you have to copy all your configuration files into the “icncfgstore/icn/configDropins/overrides” directory, and give ownership of all your directories to the appropriate user/group.

#!/bin/bash

function prepare_icn(){

# Creating IBM Content Navigator directories

echo -e "\e[92mCreating IBM Content Navigator directories\033[0m"

mkdir -p ${IBM_ICN_CONFIG_DIR}/icncfgstore/icn/configDropins/overrides/

mkdir -p ${IBM_ICN_CONFIG_DIR}/icnlogstore/logs

mkdir -p ${IBM_ICN_CONFIG_DIR}/icnpluginstore/plugins

mkdir -p ${IBM_ICN_CONFIG_DIR}/icnvwcachestore/viewercache

mkdir -p ${IBM_ICN_CONFIG_DIR}/icnvwlogstore/viewerlogs

# Copying IBM Content Navigator configuration

echo -e "\e[92mCopying IBM Content Navigator configuration\033[0m"

cp -Rrf ${IBM_ICN_BASE_DIR}/config/ICN/custom/configDropins/overrides/* ${IBM_ICN_CONFIG_DIR}/icncfgstore/icn/configDropins/overrides

# Setting rights according to the OpenShift container user

echo -e "\e[92mSetting IBM Content Navigator rights on directories\033[0m"

chown -R ${IBM_ICN_CONTAINER_USER_ID}:${IBM_ICN_CONTAINER_GROUP_ID} ${IBM_ICN_CONFIG_DIR}/icncfgstore

chown -R ${IBM_ICN_CONTAINER_USER_ID}:${IBM_ICN_CONTAINER_GROUP_ID} ${IBM_ICN_CONFIG_DIR}/icnlogstore

chown -R ${IBM_ICN_CONTAINER_USER_ID}:${IBM_ICN_CONTAINER_GROUP_ID} ${IBM_ICN_CONFIG_DIR}/icnpluginstore

chown -R ${IBM_ICN_CONTAINER_USER_ID}:${IBM_ICN_CONTAINER_GROUP_ID} ${IBM_ICN_CONFIG_DIR}/icnvwcachestore

chown -R ${IBM_ICN_CONTAINER_USER_ID}:${IBM_ICN_CONTAINER_GROUP_ID} ${IBM_ICN_CONFIG_DIR}/icnvwlogstore

}

prepare_icn
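Before going further, it can be worth checking that the five store directories really exist and belong to the container user, since a wrong owner usually only shows up later as a pod that cannot write its logs. Here is a small sketch of such a check; the `check_icn_dirs` name is hypothetical, and it assumes GNU `stat` (the `-c` option), which is what you get on CentOS.

```shell
#!/bin/bash
# Hypothetical check: every ICN store directory must exist and be owned by uid:gid.
# Usage: check_icn_dirs <base directory> <uid>:<gid>
check_icn_dirs() {
  base_dir=$1
  expected_owner=$2
  status=0
  for store in icncfgstore icnlogstore icnpluginstore icnvwcachestore icnvwlogstore; do
    dir="${base_dir}/${store}"
    if [ ! -d "${dir}" ]; then
      echo "MISSING ${dir}"
      status=1
    elif [ "$(stat -c '%u:%g' "${dir}")" != "${expected_owner}" ]; then
      # stat -c '%u:%g' prints the numeric owner and group (GNU coreutils)
      echo "WRONG OWNER ${dir}"
      status=1
    else
      echo "OK ${dir}"
    fi
  done
  return ${status}
}
```

With the variables used in the script above, the call would look like `check_icn_dirs "${IBM_ICN_CONFIG_DIR}" "${IBM_ICN_CONTAINER_USER_ID}:${IBM_ICN_CONTAINER_GROUP_ID}"`. It only inspects the top-level directories, not every file below them.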

Deploying IBM Content Navigator

IBM Content Navigator needs some persistent volumes and persistent volume claims to be deployed.

CREATING PERSISTENT VOLUMES

Cfgstore volumes

This volume will be used to store the IBM Content Navigator configuration files.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: icn-cfgstore-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/icncfgstore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: icn-cfgstore-pv
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: icn-cfgstore-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: icn-cfgstore-pv
  volumeName: icn-cfgstore-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

Log store volumes

This volume will be used to store IBM Content Navigator and Liberty logs.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: icn-logstore-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/icnlogstore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: icn-logstore-pv
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: icn-logstore-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: icn-logstore-pv
  volumeName: icn-logstore-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

Plugin store volumes

This volume will be used to store IBM Content Navigator plugins.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: icn-pluginstore-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/icnpluginstore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: icn-pluginstore-pv
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: icn-pluginstore-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: icn-pluginstore-pv
  volumeName: icn-pluginstore-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

Viewer log volumes

This volume will be used to store IBM Content Navigator viewer logs.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: icn-vwlogstore-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/icnvwlogstore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: icn-vwlogstore-pv
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: icn-vwlogstore-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: icn-vwlogstore-pv
  volumeName: icn-vwlogstore-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi

Viewer cache volumes

This volume will be used to store IBM Content Navigator viewer cache.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: icn-vwcachestore-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: /home/worker/icnvwcachestore/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: icn-vwcachestore-pv
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: icn-vwcachestore-pvc
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: icn-vwcachestore-pv
  volumeName: icn-vwcachestore-pv
status:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
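The five volume definitions above differ only by the store name, so they can also be generated instead of being maintained by hand. This is just a sketch of that idea: `render_icn_volume` is a hypothetical helper, the `dev` namespace and `/home/worker` root are the values from my own setup, and the `status` section is left out because the cluster fills it in.

```shell
#!/bin/bash
# Hypothetical generator: prints the PV and PVC for one ICN store on stdout.
# Usage: render_icn_volume <store> [namespace] [host root directory]
# <store> is one of: cfgstore, logstore, pluginstore, vwcachestore, vwlogstore
render_icn_volume() {
  store=$1
  namespace=${2:-dev}
  host_root=${3:-/home/worker}
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: icn-${store}-pv
  labels:
    type: local
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1Gi
  hostPath:
    path: ${host_root}/icn${store}/
  persistentVolumeReclaimPolicy: Retain
  storageClassName: icn-${store}-pv
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: icn-${store}-pvc
  namespace: ${namespace}
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: icn-${store}-pv
  volumeName: icn-${store}-pv
EOF
}
```

The output can be piped straight to the cluster, for example `render_icn_volume logstore | oc apply -f -`, or redirected to a file if you prefer to keep the manifests under version control.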

PUSHING IBM IMAGES TO OPENSHIFT DOCKER REGISTRY

As done for IBM Content Platform Engine, you must push IBM Content Navigator images to the OpenShift Docker registry. This is done using the following command lines.

oc login -u ${OPENSHIFT_USER_NAME} -p ${OPENSHIFT_USER_PASSWORD}

oc project ${OPENSHIFT_PROJECT_NAME}

docker login -u ${OPENSHIFT_USER_NAME} -p $(oc whoami -t) ${OPENSHIFT_REGISTRY}

docker tag navigator:ga-307-icn ${OPENSHIFT_REGISTRY}/${OPENSHIFT_PROJECT_NAME}/navigator:ga-307-icn

docker push ${OPENSHIFT_REGISTRY}/${OPENSHIFT_PROJECT_NAME}/navigator:ga-307-icn

docker logout ${OPENSHIFT_REGISTRY}
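These commands depend on several environment variables, and an empty one gives confusing errors (for instance a tag that starts with a slash). A small guard can catch that early. This is a sketch only; `require_vars` is a hypothetical helper, not an `oc` or Docker feature.

```shell
#!/bin/bash
# Hypothetical guard: returns 1 and reports every variable that is unset or empty.
# Only pass trusted variable names, since they are expanded through eval.
require_vars() {
  missing=0
  for name in "$@"; do
    eval "value=\${${name}:-}"
    if [ -z "${value}" ]; then
      echo "Missing required variable: ${name}" >&2
      missing=1
    fi
  done
  return ${missing}
}
```

Called as `require_vars OPENSHIFT_USER_NAME OPENSHIFT_USER_PASSWORD OPENSHIFT_PROJECT_NAME OPENSHIFT_REGISTRY || exit 1` before the login, it stops the script before anything is tagged or pushed.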

DEFINING THE IBM CONTENT NAVIGATOR DEPLOYMENT CONFIGURATION FILE

As done previously for IBM Content Platform Engine, prepare the IBM Content Navigator deployment file.

apiVersion: v1
kind: Service
metadata:
  name: ecm-icn-svc
spec:
  ports:
    - name: http
      protocol: TCP
      port: 9080
      targetPort: 9080
    - name: https
      protocol: TCP
      port: 9443
      targetPort: 9443
    - name: metrics
      protocol: TCP
      port: 9103
      targetPort: 9103
  selector:
    app: icnserver-cluster1
  type: NodePort
  sessionAffinity: ClientIP
---
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: ecm-icn
spec:
  replicas: 1
  strategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: icnserver-cluster1
    spec:
      imagePullSecrets:
        - name: admin.registrykey
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - icnserver-cluster1
                topologyKey: "kubernetes.io/hostname"
      # Adding specific network aliases
      hostAliases:
        # Resolving ldap hostname
        - ip: "172.17.0.6"
          hostnames:
            - "ldap"
        # Resolving db2 hostname
        - ip: "172.17.0.8"
          hostnames:
            - "db2"
      containers:
        - image: 172.30.1.1:5000/dev/navigator:ga-307-icn
          imagePullPolicy: Always
          name: ecm-icn
          securityContext:
            runAsUser: 50001
            allowPrivilegeEscalation: false
          resources:
            requests:
              memory: 512Mi
              cpu: 500m
            limits:
              memory: 1024Mi
              cpu: 1
          ports:
            - containerPort: 9080
              name: http
            - containerPort: 9443
              name: https
            - containerPort: 9103
              name: metrics
          env:
            - name: LICENSE
              value: "accept"
            - name: JVM_HEAP_XMS
              value: "512m"
            - name: JVM_HEAP_XMX
              value: "1024m"
            - name: TZ
              value: "Etc/UTC"
            - name: ICNDBTYPE
              value: "db2"
            - name: ICNJNDIDS
              value: "ECMClientDS"
            - name: ICNSCHEMA
              value: "ICNDB"
            - name: ICNTS
              value: "ICNDB"
            - name: MY_POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: MY_POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: MY_POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          readinessProbe:
            httpGet:
              path: /navigator
              port: 9080
              httpHeaders:
                - name: Content-Encoding
                  value: gzip
            initialDelaySeconds: 180
            periodSeconds: 5
          livenessProbe:
            httpGet:
              path: /navigator
              port: 9080
              httpHeaders:
                - name: Content-Encoding
                  value: gzip
            initialDelaySeconds: 600
            periodSeconds: 5
          volumeMounts:
            - name: icncfgstore-pvc
              mountPath: "/opt/ibm/wlp/usr/servers/defaultServer/configDropins/overrides"
              subPath: icn/configDropins/overrides
            - name: icnlogstore-pvc
              mountPath: "/opt/ibm/wlp/usr/servers/defaultServer/logs"
              subPath: logs
            - name: icnpluginstore-pvc
              mountPath: "/opt/ibm/plugins"
              subPath: plugins
            - name: icnvwcachestore-pvc
              mountPath: "/opt/ibm/viewerconfig/cache"
              subPath: viewercache
            - name: icnvwlogstore-pvc
              mountPath: "/opt/ibm/viewerconfig/logs"
              subPath: viewerlogs
      volumes:
        - name: icncfgstore-pvc
          persistentVolumeClaim:
            claimName: "icn-cfgstore-pvc"
        - name: icnlogstore-pvc
          persistentVolumeClaim:
            claimName: "icn-logstore-pvc"
        - name: icnpluginstore-pvc
          persistentVolumeClaim:
            claimName: "icn-pluginstore-pvc"
        - name: icnvwcachestore-pvc
          persistentVolumeClaim:
            claimName: "icn-vwcachestore-pvc"
        - name: icnvwlogstore-pvc
          persistentVolumeClaim:
            claimName: "icn-vwlogstore-pvc"
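Because the readiness probe waits 180 seconds before its first check, the pod stays "not ready" for at least three minutes after it is created. Rather than refreshing the console, you can wait for the rollout from the command line. The sketch below is an assumption about how I would wrap it (`wait_for_icn` is a hypothetical name); it relies on `oc rollout status` accepting a Kubernetes Deployment, which you should verify on your `oc` version.

```shell
#!/bin/bash
# Hypothetical helper: waits for the ecm-icn Deployment, then lists its pods.
# Usage: wait_for_icn [namespace]
wait_for_icn() {
  namespace=${1:-dev}
  # Blocks until the rollout completes or is reported as failed
  oc rollout status deployment/ecm-icn -n "${namespace}" || return 1
  # Same label as the Service selector defined above
  oc get pods -l app=icnserver-cluster1 -n "${namespace}"
}
```

Run it right after creating the deployment file, for example `wait_for_icn "${OPENSHIFT_PROJECT_NAME}"`.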

DEFINING THE ROUTE TO REACH YOUR IBM CONTENT NAVIGATOR CLUSTER

Create the route used to access the IBM Content Navigator cluster.

apiVersion: route.openshift.io/v1
kind: Route
metadata:
  annotations:
    openshift.io/host.generated: 'true'
  name: my-icn-route
  namespace: dev
spec:
  port:
    targetPort: http
  to:
    kind: Service
    name: ecm-icn-svc
    weight: 100
  wildcardPolicy: None
  # TLS termination
  tls:
    # edge, passthrough or reencrypt
    termination: edge
    # Allow http connections
    insecureEdgeTerminationPolicy: Allow
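Since the host name of this route is generated by OpenShift, you need to read it back once the route exists. Here is a minimal sketch (`icn_route_url` is a hypothetical helper; the `/navigator` path is the one used by the probes of the deployment file).

```shell
#!/bin/bash
# Hypothetical helper: prints the URL of IBM Content Navigator behind a route.
# Usage: icn_route_url [route name] [namespace]
icn_route_url() {
  route=${1:-my-icn-route}
  namespace=${2:-dev}
  # .spec.host holds the host name assigned to the route
  host=$(oc get route "${route}" -n "${namespace}" -o jsonpath='{.spec.host}') || return 1
  echo "https://${host}/navigator"
}
```

Calling `icn_route_url` with no argument uses the route name and namespace from the definition above.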

THE WHOLE SCRIPT

#!/bin/bash

function prerequisites(){
    # OpenShift Docker Registry
    export OPENSHIFT_REGISTRY=$(docker exec $(docker ps --format "{{.Names}}" | grep k8s_registry_docker-registry) env | grep DOCKER_REGISTRY_PORT_5000_TCP_ADDR | cut -d'=' -f2):$(docker exec $(docker ps --format "{{.Names}}" | grep k8s_registry_docker-registry) env | grep DOCKER_REGISTRY_PORT_5000_TCP_PORT | cut -d'=' -f2)
}

function run_icn(){
    # Creating Persistent Volumes
    echo -e "\e[92mCreating IBM Content Navigator persistent volumes\033[0m"
    oc login -u system:admin
    oc apply -f ${IBM_ICN_BASE_DIR}/persistent-volumes/icn/icn-cfgstore-pv.yaml ; oc apply -f ${IBM_ICN_BASE_DIR}/persistent-volumes/icn/icn-cfgstore-pvc.yaml
    oc apply -f ${IBM_ICN_BASE_DIR}/persistent-volumes/icn/icn-logstore-pv.yaml ; oc apply -f ${IBM_ICN_BASE_DIR}/persistent-volumes/icn/icn-logstore-pvc.yaml
    oc apply -f ${IBM_ICN_BASE_DIR}/persistent-volumes/icn/icn-pluginstore-pv.yaml ; oc apply -f ${IBM_ICN_BASE_DIR}/persistent-volumes/icn/icn-pluginstore-pvc.yaml
    oc apply -f ${IBM_ICN_BASE_DIR}/persistent-volumes/icn/icn-vwcachestore-pv.yaml ; oc apply -f ${IBM_ICN_BASE_DIR}/persistent-volumes/icn/icn-vwcachestore-pvc.yaml
    oc apply -f ${IBM_ICN_BASE_DIR}/persistent-volumes/icn/icn-vwlogstore-pv.yaml ; oc apply -f ${IBM_ICN_BASE_DIR}/persistent-volumes/icn/icn-vwlogstore-pvc.yaml

    # Adding IBM Content Navigator to OpenShift Docker registry
    echo -e "\e[92mPushing IBM Content Navigator image to OpenShift\033[0m"
    oc login -u ${OPENSHIFT_USER_NAME} -p ${OPENSHIFT_USER_PASSWORD}
    oc project ${OPENSHIFT_PROJECT_NAME}
    docker login -u ${OPENSHIFT_USER_NAME} -p $(oc whoami -t) ${OPENSHIFT_REGISTRY}
    docker tag navigator:ga-307-icn ${OPENSHIFT_REGISTRY}/${OPENSHIFT_PROJECT_NAME}/navigator:ga-307-icn
    docker push ${OPENSHIFT_REGISTRY}/${OPENSHIFT_PROJECT_NAME}/navigator:ga-307-icn
    docker logout ${OPENSHIFT_REGISTRY}

    # Deploying IBM Content Navigator
    echo -e "\e[92mCustomizing IBM Content Navigator deployment file\033[0m"
    cp -p ${IBM_ICN_BASE_DIR}/deploys/generic-icn-deploy.yml ${IBM_ICN_BASE_DIR}/deploys/icn-deploy.yml
    # Replacing the @...@ placeholders of the generic file with the actual values
    DB_IP=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' ibm-db2)
    sed -i "s/@DB_IP@/${DB_IP}/g" ${IBM_ICN_BASE_DIR}/deploys/icn-deploy.yml
    LDAP_IP=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' ldap)
    sed -i "s/@LDAP_IP@/${LDAP_IP}/g" ${IBM_ICN_BASE_DIR}/deploys/icn-deploy.yml
    sed -i "s/@OPENSHIFT_REGISTRY@/${OPENSHIFT_REGISTRY}/g" ${IBM_ICN_BASE_DIR}/deploys/icn-deploy.yml
    sed -i "s/@OPENSHIFT_PROJECT_NAME@/${OPENSHIFT_PROJECT_NAME}/g" ${IBM_ICN_BASE_DIR}/deploys/icn-deploy.yml
    echo -e "\e[92mDeploying IBM Content Navigator\033[0m"
    oc create -f ${IBM_ICN_BASE_DIR}/deploys/icn-deploy.yml

    # Creating route
    echo -e "\e[92mCreating IBM Content Navigator route\033[0m"
    oc create -f ${IBM_ICN_BASE_DIR}/route/icn-route.yaml
}

prerequisites

run_icn
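One weak point of the `sed` customization step is that it never fails: if `docker inspect` returns nothing, the deployment file simply ends up with an empty IP. As a sketch of a stricter variant (the `render_deploy_template` function is hypothetical, and it assumes the values contain no `/` or `&`, which holds for IP addresses, a `host:port` registry and a project name):

```shell
#!/bin/bash
# Hypothetical stricter rendering of the generic deployment file.
# Usage: render_deploy_template <template file> <output file>
render_deploy_template() {
  template=$1
  output=$2
  # Refuse to render with an empty value
  for value in "${DB_IP:-}" "${LDAP_IP:-}" "${OPENSHIFT_REGISTRY:-}" "${OPENSHIFT_PROJECT_NAME:-}"; do
    if [ -z "${value}" ]; then
      echo "One of DB_IP, LDAP_IP, OPENSHIFT_REGISTRY, OPENSHIFT_PROJECT_NAME is empty" >&2
      return 1
    fi
  done
  sed -e "s/@DB_IP@/${DB_IP}/g" \
      -e "s/@LDAP_IP@/${LDAP_IP}/g" \
      -e "s/@OPENSHIFT_REGISTRY@/${OPENSHIFT_REGISTRY}/g" \
      -e "s/@OPENSHIFT_PROJECT_NAME@/${OPENSHIFT_PROJECT_NAME}/g" \
      "${template}" > "${output}"
  # Any @NAME@ left over means the template uses a placeholder we do not know
  if grep -n '@[A-Z_]*@' "${output}"; then
    echo "Unresolved placeholders in ${output}" >&2
    return 1
  fi
}
```

It would replace the `cp` and the four `sed -i` calls, for example `render_deploy_template ${IBM_ICN_BASE_DIR}/deploys/generic-icn-deploy.yml ${IBM_ICN_BASE_DIR}/deploys/icn-deploy.yml || exit 1`.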

The result

Finally, what did those scripts create?

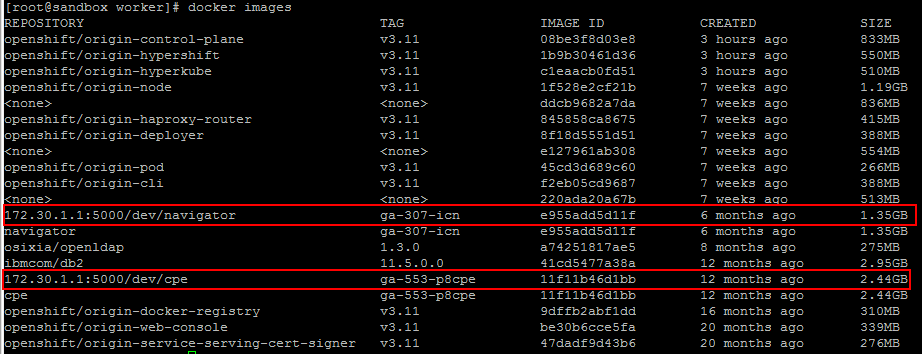

OpenShift Docker Registry

The images were successfully loaded into the OpenShift Docker registry.

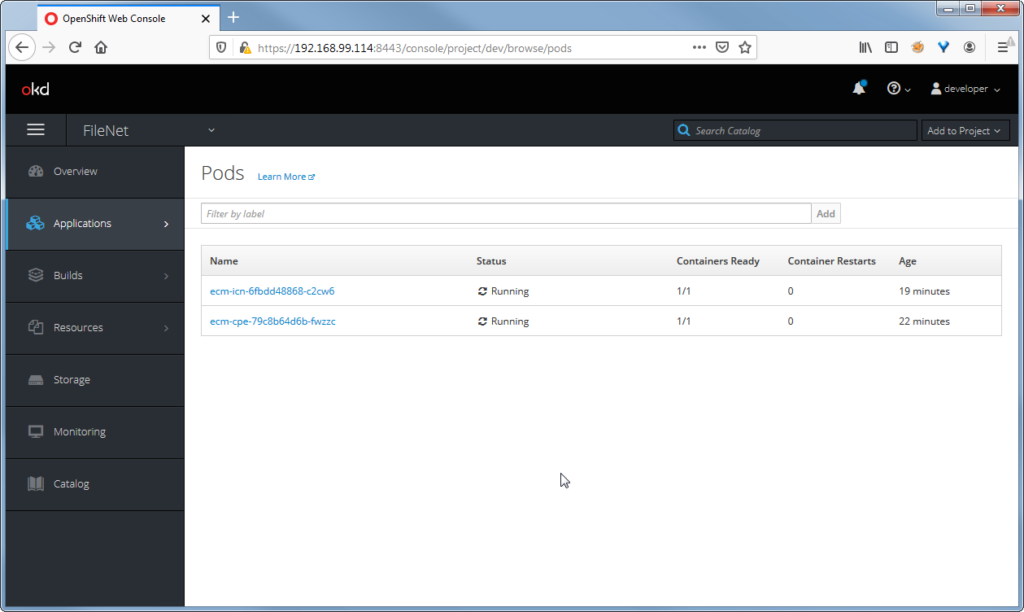

OpenShift project

The OpenShift project is created, and there are currently two pods running in it.

The first one runs IBM Content Platform Engine.

And the second one runs IBM Content Navigator!

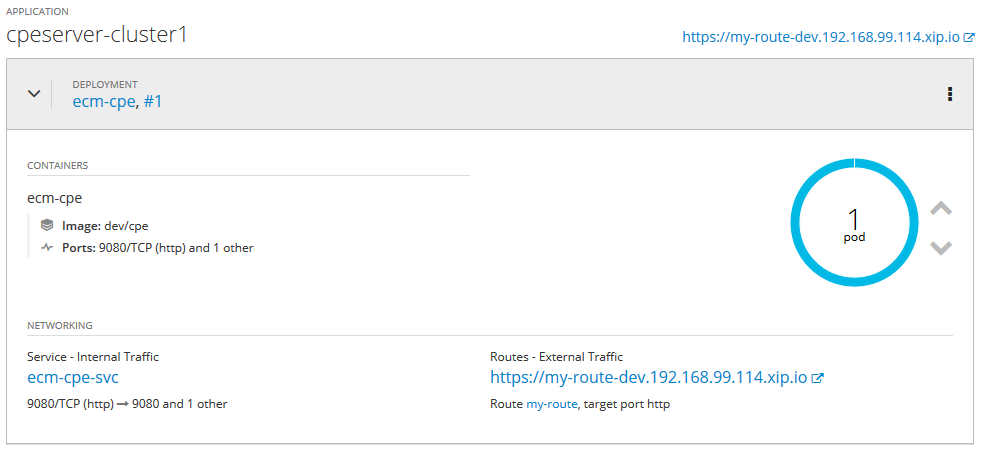

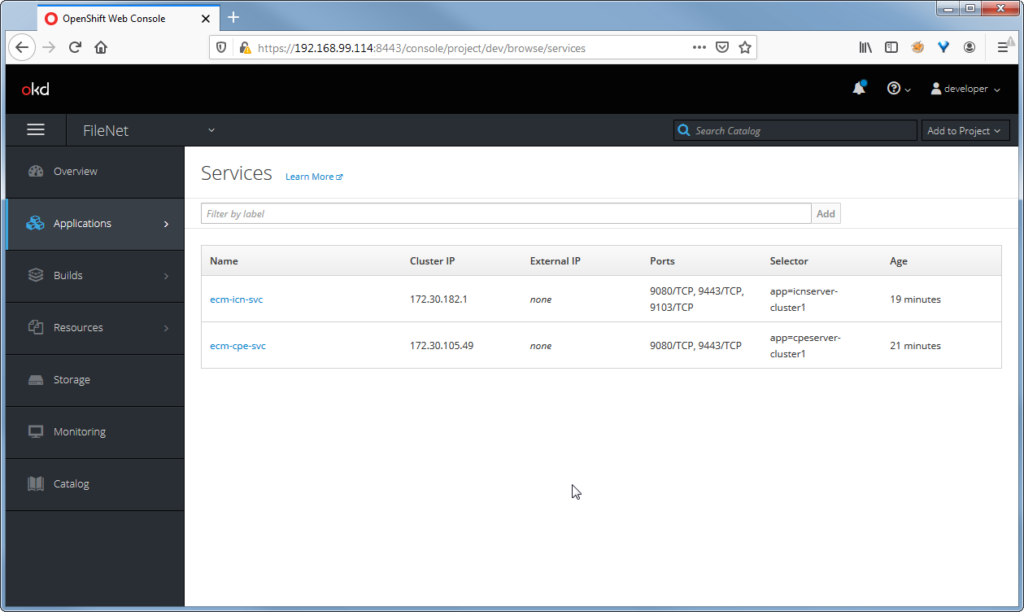

All services are up and running.

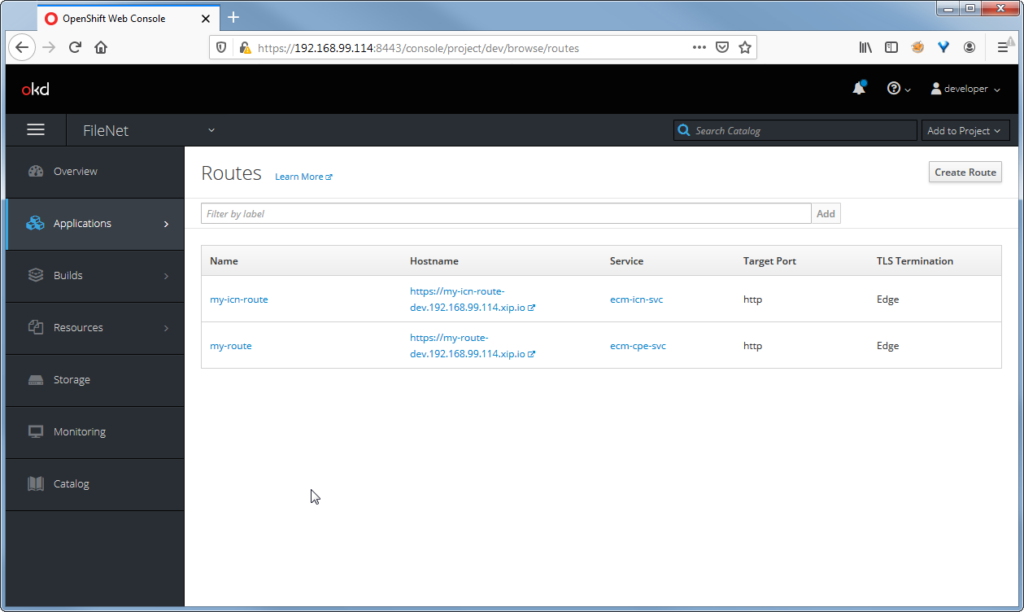

The routes are declared.

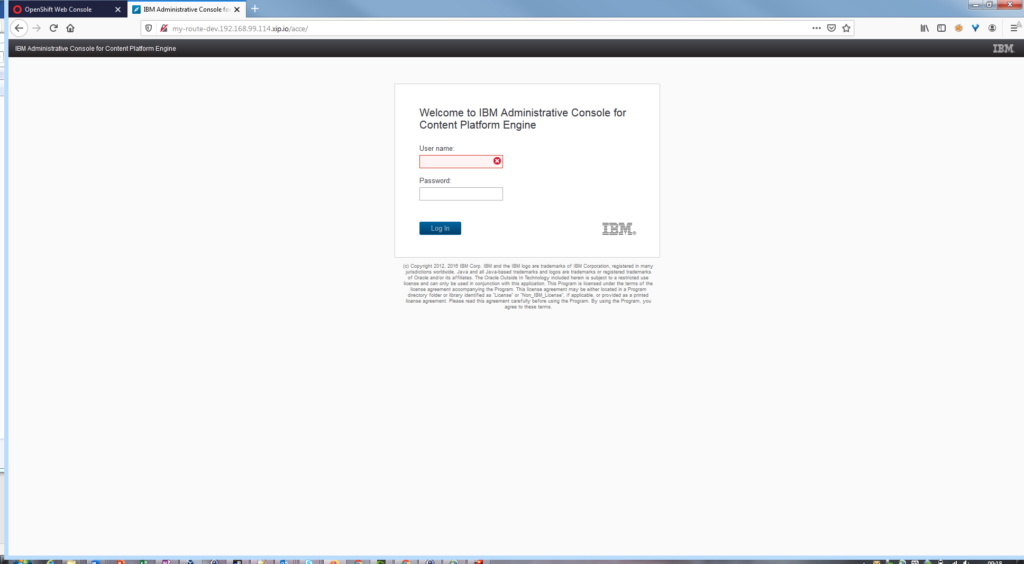

Accessing IBM Content Platform Engine

Using the associated route, I’m able to connect to the IBM Administration Console for Content Platform Engine (ACCE).

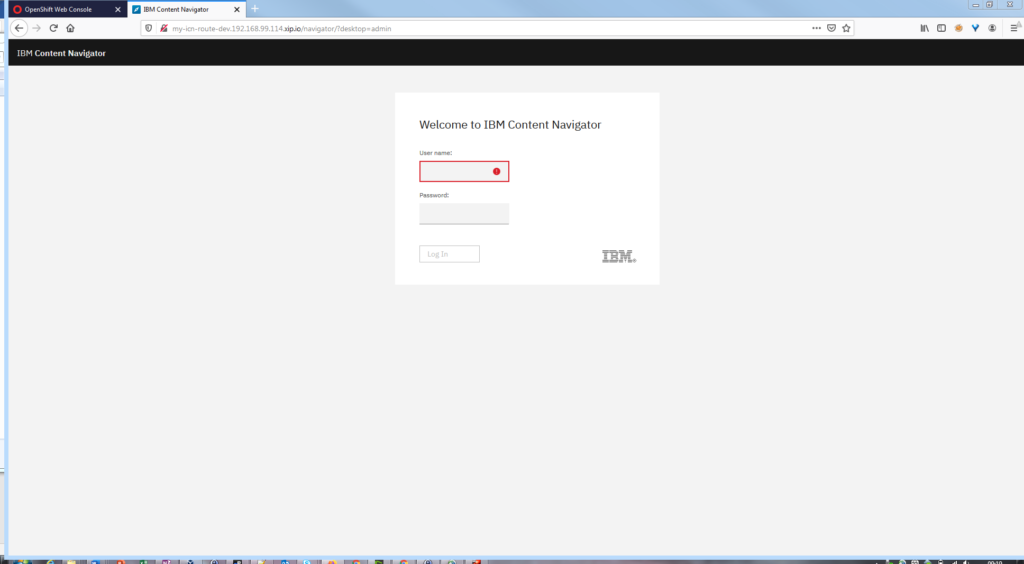

Accessing IBM Content Navigator

Using the second route, I’m able to connect to IBM Content Navigator!

What to do next?

You can now create a domain and an object store using the ACCE, and configure the associated desktop in IBM Content Navigator.

Things to know

You can configure each route to be served over SSL/TLS, which is very useful in a production environment.

The volume used to store documents, the “/home/worker/cpefilestore/” directory on my host, is available in the “/opt/ibm/asa” directory inside the container.

All your IBM Content Navigator plugins, stored in the “/home/worker/icnpluginstore/” directory on my side, are available in the “/opt/ibm/plugins” directory inside the container.
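On the SSL point: the route shown earlier already terminates TLS at the edge but still allows plain HTTP. One way to tighten this, as a sketch only, is to switch the insecure policy to "Redirect" so that HTTP requests are sent to HTTPS. The `force_https_on_route` wrapper is a hypothetical name; the patch itself uses the standard `insecureEdgeTerminationPolicy` field of the route.

```shell
#!/bin/bash
# Hypothetical helper: makes an edge-terminated route redirect HTTP to HTTPS.
# Usage: force_https_on_route <route name> [namespace]
force_https_on_route() {
  route=$1
  namespace=${2:-dev}
  oc patch route "${route}" -n "${namespace}" --type=merge \
    -p '{"spec":{"tls":{"insecureEdgeTerminationPolicy":"Redirect"}}}'
}
```

For the route created in this post, that would be `force_https_on_route my-icn-route dev`.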
